How to Avoid Detection by AI Text Detectors with the Help of Undetectable AI

Advancements in artificial intelligence (AI) have transformed many industries, including cybersecurity and content analysis. AI text detectors are tools that analyze text to identify patterns, allowing organizations to flag potential threats, spam, or inappropriate content. However, some people seek to avoid detection by these systems for various reasons, which has led to the emergence of so-called undetectable AI tools.

In this article, we explore how people try to avoid detection by AI text detectors with the help of Undetectable AI. We examine why individuals might want to bypass these detectors, the methods AI text detectors use, and the rise of undetectable AI tools. Our aim is to provide a balanced discussion of the subject and shed light on its implications for privacy, security, and the future of text detection.

The Role of AI Text Detectors

Before delving into avoiding detection, it’s crucial to understand the role of AI text detectors and their significance in today’s digital landscape. AI text detectors are designed to analyze large volumes of text data, such as emails, social media posts, chat conversations, and documents, in order to identify patterns and make determinations based on predefined criteria.

These AI-powered detectors are used for many purposes, from identifying spam emails and filtering inappropriate content to detecting fake news and monitoring online conversations for potential threats. Because they are built on machine learning, they can be retrained on newly labeled examples, which lets them adapt to new patterns and flag suspicious or undesirable content more accurately over time.

Reasons for Avoiding Detection

While AI text detectors are valuable tools for many organizations and individuals, people have a range of reasons, some legitimate and some not, for wanting to avoid detection. These include:

Privacy Concerns

Privacy is a fundamental right in today’s digital age, and individuals may have legitimate concerns about their personal information being analyzed or monitored without consent. Whether it’s a confidential email conversation, private messaging, or personal documents, the ability for AI text detectors to analyze and interpret these texts can be seen as an invasion of privacy.

Circumventing Censorship

In certain regions or under oppressive regimes, individuals might need to communicate or share information discreetly due to censorship or government surveillance. By evading the detection capabilities of AI text detectors, individuals can potentially bypass censorship and share critical information without fear of repercussions.

Gaming the System

Some individuals try to manipulate online platforms for personal gain, whether in online games, marketing, or elsewhere. By avoiding detection, they can exploit the system's vulnerabilities and slip past restrictions put in place by content moderators or platform algorithms.

Understanding AI Text Detection Methods

To effectively avoid detection, one must first understand how AI text detectors operate. These systems use a range of methods to examine and interpret text data. Here are some key methods commonly used by AI text detectors:

Keyword Matching

One of the simplest and most straightforward methods used by AI text detectors is keyword matching. These detectors are programmed to search for specific words or phrases that indicate a particular type of content or behavior. For example, detecting words related to drugs, violence, or explicit content can help identify potentially harmful or inappropriate text.
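To make the idea concrete, here is a minimal Python sketch of a keyword filter. The watch list and the function names `keyword_hits` and `is_flagged` are hypothetical, chosen for illustration; real systems use much larger curated lists and usually combine matching with other signals.

```python
import re

# Hypothetical watch list; real filters use large, curated, frequently updated lists.
WATCH_LIST = {"click here", "free money", "scam"}


def keyword_hits(text: str, terms: set[str] = WATCH_LIST) -> list[str]:
    """Return the watch-list terms found in text, matched case-insensitively as whole words."""
    lowered = text.lower()
    hits = []
    for term in sorted(terms):
        # \b word boundaries stop "scam" from matching inside a longer word like "scamper".
        if re.search(r"\b" + re.escape(term) + r"\b", lowered):
            hits.append(term)
    return hits


def is_flagged(text: str) -> bool:
    # A text is flagged as soon as any watch-list term appears in it.
    return bool(keyword_hits(text))


print(keyword_hits("Click here to claim your FREE MONEY today!"))
# ['click here', 'free money']
```

Matching on word boundaries rather than raw substrings avoids some false positives, but it also shows the method's main limitation: it only catches the exact phrasings on the list.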

Natural Language Processing (NLP)

Natural Language Processing (NLP) techniques are employed by AI text detectors to analyze text beyond individual keywords. NLP enables the detection of patterns, sentiment analysis, and contextual understanding of text data. This approach allows the detectors to identify content that may be deceptive, misleading, or potentially harmful.
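As a rough illustration of going beyond single keywords, the following Python sketch scores sentiment with a tiny lexicon and one context rule: a negating word directly before a scored word flips its polarity. The lexicon, the negator list, and the `sentiment` function are hypothetical; practical NLP pipelines rely on trained models or much larger resources.

```python
import re

# Hypothetical polarity lexicon: positive words score above zero, negative below.
LEXICON = {"good": 1.0, "great": 2.0, "love": 2.0,
           "bad": -1.0, "awful": -2.0, "hate": -2.0}
# Hypothetical set of words that reverse the polarity of the word that follows.
NEGATORS = {"not", "never", "no"}


def tokenize(text: str) -> list[str]:
    # Lowercase and keep runs of letters/apostrophes as tokens.
    return re.findall(r"[a-z']+", text.lower())


def sentiment(text: str) -> float:
    """Sum lexicon scores, flipping a word's score if a negator directly precedes it."""
    tokens = tokenize(text)
    score = 0.0
    for i, tok in enumerate(tokens):
        value = LEXICON.get(tok, 0.0)
        if value and i > 0 and tokens[i - 1] in NEGATORS:
            value = -value  # context rule: "not good" counts as negative
        score += value
    return score


print(sentiment("This is good"))      # 1.0
print(sentiment("This is not good"))  # -1.0
```

A filter that looked only at the word "good" would treat both sentences the same; even this one-word window of context separates them.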

Machine Learning Algorithms

Machine learning algorithms play a significant role in the evolution of AI text detectors. They let detectors learn from labeled training data and make predictions based on the patterns and characteristics of that data. Because these patterns are learned rather than hand-written, detectors can pick up signals that no one explicitly programmed, and retraining on newly labeled examples can further improve their accuracy.
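The sketch below shows one classic way a detector can learn from labeled data: a multinomial naive Bayes classifier with add-one (Laplace) smoothing, written in plain Python. The training sentences, the labels, and the class name `NaiveBayes` are hypothetical examples, not taken from any real detector.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and keep runs of letters/apostrophes as tokens.
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayes:
    """Multinomial naive Bayes text classifier with add-one (Laplace) smoothing."""

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayes":
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)  # documents per class, used for priors
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        # Vocabulary = every token seen in any class.
        self.vocab = set().union(*self.word_counts.values())
        self.total_docs = len(labels)
        return self

    def log_score(self, tokens: list[str], c: str) -> float:
        # log P(c) + sum of log P(token | c); smoothing keeps unseen tokens from giving log(0).
        total = sum(self.word_counts[c].values())
        v = len(self.vocab)
        score = math.log(self.doc_counts[c] / self.total_docs)
        for tok in tokens:
            score += math.log((self.word_counts[c][tok] + 1) / (total + v))
        return score

    def predict(self, text: str) -> str:
        # Pick the class with the highest log-probability score.
        tokens = tokenize(text)
        return max(self.classes, key=lambda c: self.log_score(tokens, c))


# Hypothetical labeled training data.
texts = [
    "win free money now",
    "claim your free prize",
    "meeting moved to monday",
    "see notes from the meeting",
]
labels = ["spam", "spam", "ham", "ham"]

model = NaiveBayes().fit(texts, labels)
print(model.predict("free money prize"))        # spam
print(model.predict("notes from the meeting"))  # ham
```

Calling `fit` again with the original examples plus newly labeled ones is the simplest way a model like this improves over time; it does not update itself merely by seeing unlabeled text.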


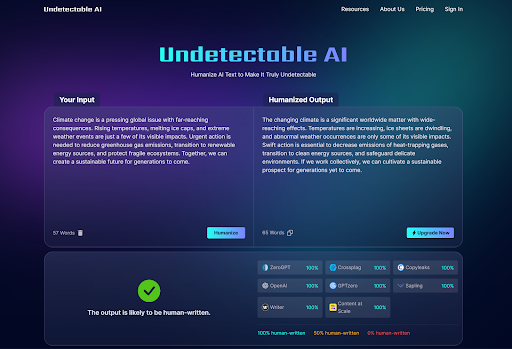

The Emergence of Undetectable AI Tools

With the increasing demand for privacy and the desire to avoid detection by AI text detectors, developers have been exploring the creation of undetectable AI tools. These tools aim to outsmart or bypass the detection mechanisms employed by AI text detectors, raising concerns about the potential misuse or abuse of such technology.

Undetectable AI tools use several techniques that aim to make their output harder for AI text detectors to flag. Some of these techniques include:

Obfuscation

Obfuscation is a technique employed by undetectable AI tools to alter the structure or content of texts in a way that makes it difficult for AI text detectors to interpret the information correctly. This can involve changing word spellings, introducing synonyms, or rephrasing sentences while maintaining the intended meaning.

Randomization

Undetectable AI tools may also use randomization techniques to vary the text patterns, making it challenging for AI text detectors to identify consistent patterns or signatures. By introducing randomness, these tools aim to confuse the detectors and prevent accurate classification.

GAN (Generative Adversarial Network) Based Approaches

Generative Adversarial Networks (GANs) are another technique that undetectable AI tools may use. A GAN consists of two neural networks: a generator and a discriminator. The generator creates text samples, while the discriminator tries to distinguish real texts from generated ones. Adversarial training pushes the generator toward output that the discriminator cannot tell apart from real text, which in principle can also make that text harder for detection algorithms to flag.
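For readers who want the underlying math, the standard GAN training objective is a minimax game between the discriminator D and the generator G. This is the generic textbook formulation, not a description of any particular tool's internals:

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

Here D(x) is the discriminator's estimated probability that x is a real text, p_data is the distribution of real texts, and G(z) is the text produced from random noise z drawn from p_z. The discriminator tries to maximize V while the generator tries to minimize it. For text, G(z) is a sequence of discrete tokens, and sampling tokens is not differentiable, so text GANs in practice need workarounds such as policy-gradient (reinforcement learning) estimates or Gumbel-softmax relaxations.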

Implications and Ethical Considerations

The rise of undetectable AI tools raises several implications and ethical considerations. It is important to critically examine the potential consequences of using such tools and understand their impact on privacy, security, and the overall functioning of digital platforms.

Erosion of Trust and Content Moderation Challenges

If undetectable AI tools gain widespread adoption, it could erode trust in digital platforms and content moderation systems. Platforms that rely on AI text detectors for spam prevention, hate speech identification, or content filtering might struggle to maintain the quality and safety of their platforms. This could result in an increased dissemination of harmful or inappropriate content, impacting users’ experience and platform reputation.

Legal and Regulatory Considerations

The emergence of undetectable AI tools also poses legal and regulatory challenges. Existing laws and regulations surrounding privacy, data protection, and content moderation might need to be revisited to address the potential risks posed by such tools. Striking a balance between individual privacy rights and the need for effective content moderation is a complex task that requires careful consideration.

Ensuring Responsible Use of AI Technology

As with any technology, the responsible and ethical use of undetectable AI tools is crucial. Developers, organizations, and users must be mindful of the potential consequences and ensure they are not violating laws or infringing upon the rights of others. Responsible use involves considering the broader impact on society and taking proactive measures to avoid misuse or harm.

Conclusion

Undetectable AI tools present a significant challenge to the role and effectiveness of AI text detectors. As these tools continue to evolve, the tension between privacy, security, and the need for content moderation will persist. Striking a balance that respects individuals’ privacy rights while ensuring platforms remain safe and trustworthy is an ongoing task that requires collective effort.

While undetectable AI tools may provide individuals with ways to avoid detection, it is essential to consider the broader implications and ethical considerations surrounding their use. The responsible development, use, and regulation of AI technology are crucial to navigate these challenges effectively.

As we move forward in the digital age, it is imperative to engage in open and transparent conversations about the impact of undetectable AI tools, shaping policies and regulations that protect privacy, enhance security, and maintain the integrity of digital platforms. Only through careful consideration and forward-thinking can we strike the right balance and ensure technology serves the greater good.